What future for autonomous weapons?

27 February 2020 | Written by the editorial staff

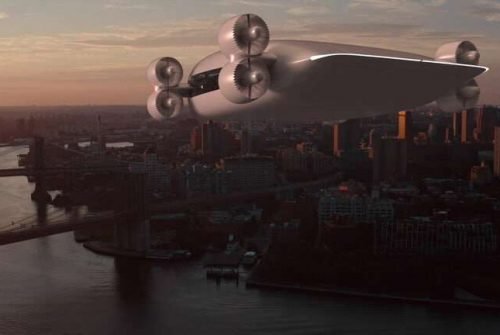

Seven countries are developing autonomous weapons. If this cannot be prevented, common regulation must be developed.

Autonomous weapons inspire fear: they are weapon systems capable of recognizing, engaging and killing a person without any human intervention in the process. To date, seven countries (the United States, China, Russia, the United Kingdom, France, Israel and South Korea) are developing this technology. Similar weapon systems already exist but, as in the case of U.S. military drones, they require a human operator to confirm recognition and physically pull the trigger. With the development of artificial intelligence and facial recognition systems, the day when even that last step is entrusted to machines is not far off, and with it the first truly autonomous killing machine. Do we really want such a technology to be developed?

The open questions. Are autonomous weapon systems (AWS) a violation of human rights? What about the Geneva Convention? Who is responsible for their actions? We are faced with semantic as well as technological issues. Weapons are tools, extensions of human will, in this case the will to harm. If tools turn from objects into subjects, what changes, not only in the way war is waged but also in how we approach the whole body of norms and rights tied to acts of war? The open questions are many, and they must be addressed critically and in detail so that the development of autonomous weapons, if it must be allowed, does not get out of hand.