The ethical dilemma of autonomous weapons

10 December 2019 | Written by La redazione

Giant strides in robotics and artificial intelligence raise dilemmas about the use and risks of these technologies

The Massachusetts bomb squad has been using Boston Dynamics SpotMini robots in its operations for several months, deploying them as mobile support platforms or to monitor dangerous situations. It has not been disclosed how much autonomy lay behind the robots' actions, that is, whether they followed their artificial intelligence algorithms to manage operations or whether a human operator controlled their movements. Either way, the robots clearly had a certain degree of autonomy. What if one day a police department wanted SpotMini? And what if these robots were armed? The ethical debate on autonomous weapons must start now, before the technology develops in undesirable directions.

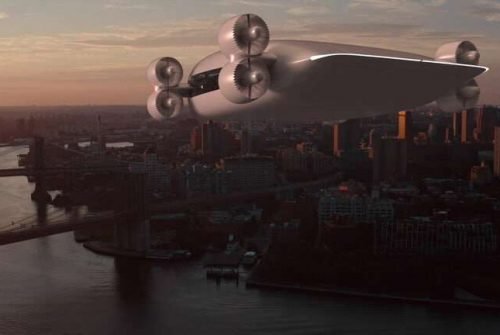

Autonomous machines. By autonomous machines we mean artificial intelligence systems, possibly applied to robotics, that are able to make decisions. An algorithm capable of identifying a given image, for example, in effect "decides" whether what it is shown is the image in question. In a recently published article in The Conversation, Christoffer Heckman, professor of Computer Science at the University of Colorado Boulder, offers important considerations on the matter. In 2010, Heckman says, image recognition algorithms had a success rate of around 50%; today they are at around 86%. This means two things: these algorithms are improving at an exponential rate and becoming ever more effective, and the more effective the machines become, the more likely it is that humans will be removed from the equation. As long as the task is recognizing one image among many, he continues, the problem does not seem serious. But if the task were identifying a specific person, perhaps a dangerous suspect, removing humans from the process could be the start of a slippery slope, especially if the robot were armed.

Within everyone’s reach. The development of autonomous machines is therefore moving fast, carried on the wings of the network: the Internet allows a rapid and steady exchange of skills and techniques. Where this knowledge was once confined to experts, platforms are now emerging that offer tutorials and DIY guides on building your own AI, allowing people with no computer background to learn some of the tools needed to train a cutting-edge autonomous system. How difficult would it be for an attacker to acquire these skills and train an AI to fly a drone armed with explosives?

Managing autonomous weapons. The technology behind systems capable of selecting and striking targets without human intervention already exists, and from a technical point of view fully autonomous weapons may not be far away. It is therefore essential to build a collective debate around them and their possible ban. Several researchers, experts and ethicists are examining the question. According to Professor Heckman there are three possible approaches. The first is strict regulation, but given how easily these systems can be developed independently, the effectiveness of such measures would certainly be limited.

A second approach could be self-regulation: the researchers involved in developing autonomous machines could meet and try to agree on guidelines. The matter would be delicate, however, since the technologies behind these devices often have uses that are not only peaceful but also extremely valuable.

Finally, an organization could be set up to manage and oversee studies and research on the subject, but this would mean creating a supranational body whose scope of action would depend on what each state was willing to grant it.

The scientific community has already spoken out. Whatever the approach, the question remains of great interest to the scientific community: last February a group of scientists published a letter calling for a ban on the research and development of autonomous weapon systems controlled by artificial intelligence. What matters, however, is that this discussion moves out of the laboratories and also involves those who do not deal with these issues firsthand. The future of autonomous machines is in our hands, and to steer it toward the best possible outcome we need only make our voices heard.