According to the International Conference on Machine Learning, artificial intelligence systems could easily fall prey to a new generation of hackers.

26 July 2018 | Written by the editorial staff

Distinguishing a turtle from a shotgun, or a cup of coffee from a 3D-printed baseball, should not be hard, especially for the sophisticated artificial intelligence systems that are steadily entering our lives. Yet AI can apparently be fooled more easily than expected, and a new generation of hackers could put the security of this technology at risk.

According to Science, the International Conference on Machine Learning (ICML) has sounded a warning to the entire scientific community working on artificial intelligence. It emerged, for example, that altering an image, even minimally, is enough to “confuse” an AI system, which may no longer recognize what it sees or may even mistake it for something else. This applies not only to images but also to sounds and three-dimensional objects.
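To see why a tiny change can be enough, consider a toy linear classifier, a hypothetical stand-in far simpler than the deep networks discussed at ICML. The sketch below applies a gradient-sign step: every pixel moves by at most `epsilon`, but because all the nudges push the score in the same direction, their effect adds up across many pixels. The weights, bias, image and "turtle"/"rifle" labels are illustrative assumptions.

```python
# Toy illustration: a linear "image classifier" and a gradient-sign perturbation.
# The model, weights, and labels are hypothetical, chosen only for illustration.

def score(weights, bias, image):
    """Linear score: positive means 'turtle', otherwise 'rifle'."""
    return sum(w * x for w, x in zip(weights, image)) + bias

def label(weights, bias, image):
    return "turtle" if score(weights, bias, image) > 0 else "rifle"

def sign(v):
    return (v > 0) - (v < 0)

def gradient_sign_perturb(weights, image, epsilon):
    """Move each pixel by epsilon against the sign of the score's gradient.

    For a linear score the gradient with respect to the input is just
    `weights`, so this lowers the score by epsilon * sum(|w|), the largest
    possible drop when no pixel may change by more than epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, image)]

n_pixels = 1000
weights = [0.25 if i % 2 == 0 else -0.25 for i in range(n_pixels)]
bias = 1.0
image = [0.5] * n_pixels                       # mid-grey image, pixels in [0, 1]

adversarial = gradient_sign_perturb(weights, image, epsilon=1 / 128)
print(label(weights, bias, image))             # turtle
print(label(weights, bias, adversarial))       # rifle
print(max(abs(a - b) for a, b in zip(adversarial, image)))  # 0.0078125
```

Each pixel changes by less than 1% of its range, yet the summed effect (epsilon × 250, about 1.95) outweighs the original margin of 1.0. The more pixels an input has, the more such tiny nudges can accumulate, which is one common explanation for why image models are so easy to mislead.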

Some research groups have already started taking cover, among them the Massachusetts Institute of Technology (MIT), which, in addition to putting AI systems through a series of tests, is trying to develop countermeasures.

How can an AI be manipulated? There are many ways, and some of them do not require being a hacker or an expert programmer. To confuse the artificial intelligence of a self-driving car, for example, a few stickers are enough: last year, two teams of researchers from the University of California, Berkeley, and the University of Washington applied stickers to road signs and managed to deceive the car, which mistook a stop sign for a speed-limit sign.
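The sticker trick differs from invisible pixel noise: the change is confined to a small region, but within that region it can be as strong as needed. A minimal sketch of the idea, using a hypothetical linear classifier (the "stop"/"speed limit" labels, weights and mask are illustrative assumptions, not the researchers' model or method), restricts the perturbation to a mask and pushes each covered pixel to whichever extreme lowers the score most.

```python
# Toy "sticker" attack: perturb only the pixels covered by a mask.
# Classifier, weights, and labels are hypothetical, for illustration only.

def score(weights, bias, image):
    return sum(w * x for w, x in zip(weights, image)) + bias

def label(weights, bias, image):
    return "stop" if score(weights, bias, image) > 0 else "speed limit"

def sticker_attack(weights, image, mask, low=0.0, high=1.0):
    """Inside the mask, set each pixel to the bound that most lowers a
    linear score (low where the weight is positive, high where it is
    negative); pixels outside the mask, or with zero weight, are untouched."""
    result = []
    for w, x, covered in zip(weights, image, mask):
        if covered and w > 0:
            result.append(low)
        elif covered and w < 0:
            result.append(high)
        else:
            result.append(x)
    return result

n_pixels = 100
weights = [0.25 if i % 2 == 0 else -0.25 for i in range(n_pixels)]
bias = 1.0
sign_image = [0.5] * n_pixels
mask = [i < 20 for i in range(n_pixels)]        # sticker covers 20% of the pixels

stickered = sticker_attack(weights, sign_image, mask)
print(label(weights, bias, sign_image))         # stop
print(label(weights, bias, stickered))          # speed limit
print(sum(a != b for a, b in zip(stickered, sign_image)))  # 20
```

Here 80% of the "sign" is untouched, which is what makes this kind of attack practical in the physical world: it only needs a patch the attacker can actually print and stick on.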

More complex, but still easy to understand, is the technique developed by a group of MIT researchers led by Anish Athalye: software capable of deceiving image recognition systems by exploiting a known type of attack called an adversarial example. The software alters the original image one pixel at a time and submits it to the recognition system for classification after every single modification. Each change is minimal compared with the previous version, so the artificial intelligence system keeps classifying the image as it did the original, even when, after thousands of small variations, its content has become completely different. As mentioned, the technique was already known, but the software developed by the MIT researchers makes it much faster and more effective to apply.
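The one-pixel-at-a-time procedure described above can be sketched as a simple query loop. The attacker sees only the label the system returns, changes one pixel toward a different target image, queries the classifier again, and keeps the change only if the label has not moved. This is a deliberately naive toy under stated assumptions (a hypothetical linear black box and hypothetical function names such as `pixel_walk_attack`), not the MIT team's software, which the article describes as much faster and more effective.

```python
# Naive query-based ("black-box") attack: the attacker only sees labels.
# The classifier below is a hypothetical stand-in for a real recognition system.

def make_black_box(weights, bias):
    """Return a classifier that exposes only its final label."""
    def classify(image):
        score = sum(w * x for w, x in zip(weights, image)) + bias
        return "turtle" if score > 0 else "rifle"
    return classify

def pixel_walk_attack(classify, source, target):
    """Copy `target` into `source` one pixel at a time, querying the black
    box after every change and undoing any change that alters the label."""
    original_label = classify(source)
    current = list(source)
    queries = 1
    for i in range(len(current)):
        previous = current[i]
        current[i] = target[i]             # one minimal, single-pixel change
        queries += 1
        if classify(current) != original_label:
            current[i] = previous          # this change flipped the label: revert
    return current, original_label, queries

# Hypothetical model that relies almost entirely on two "key" pixels.
classify = make_black_box(weights=[2.0, 2.0] + [0.0] * 8, bias=-1.0)
source = [1.0] * 10                        # classified as "turtle"
target = [0.0] * 10                        # a completely different image ("rifle")

adversarial, original_label, queries = pixel_walk_attack(classify, source, target)
print(original_label, classify(adversarial))                 # turtle turtle
print(sum(a == t for a, t in zip(adversarial, target)))      # 9
print(queries)                                               # 11
```

After the walk, 9 of the 10 pixels match the target image, yet the label is still "turtle", because this toy model depends on only two pixels. Attacks on real systems need many more queries and cleverer update rules; the loop only illustrates the principle.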

It is time for a change of perspective. “We need to completely rethink the machine learning concept to make it safer,” said MIT researcher Aleksander Madry, and other AI researchers and experts around the world agree.

In fact, these attacks on artificial intelligence systems could be key to uncovering new aspects of how neural networks work and to fully understanding how machine learning functions. Identifying and studying the risks is the only way to anticipate and avoid them.

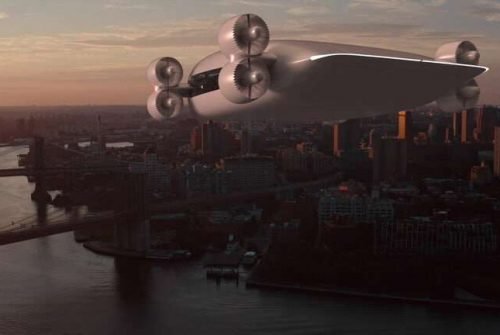

Artificial intelligence is evolving rapidly, and its applications in everyday life keep multiplying. But before these systems are entrusted with delicate tasks that put them in direct contact with people (such as driving a car or operating public transport), they must be made less vulnerable.