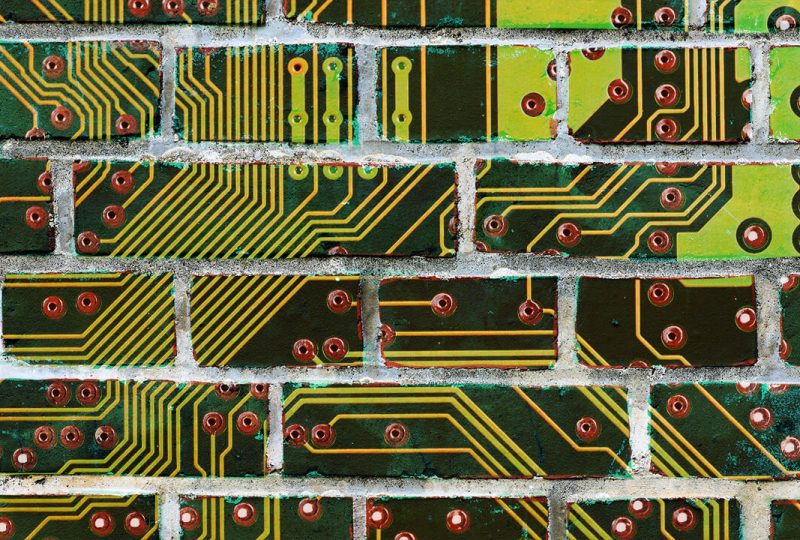

The memory wall

20 June 2019 | Written by La redazione

A wall slows down computer performance. It is not a physical barrier but a computational one.

For computing enthusiasts the concept will not be new, but almost anyone who uses a computer has probably run into this phenomenon without knowing it. It is called the memory wall, a concept theorized in 1994 by William Wulf and Sally McKee, two computer scientists at the University of Virginia. This barrier degrades the performance of our own computers, if only marginally, and of the supercomputers used in research. Solutions are needed, and a group of Greek scientists may be on the right track to tear down this wall by using light.

The paradox emerges from observed trends in the performance of computer components. The capabilities of processors, the brains of the computer that carry out the operations, grow by about 55% every year. In contrast, the speed of RAM (Random Access Memory), the short-term memory that processors use while performing calculations, grows by a meager 9-10% per year.
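To get a feel for how quickly these two rates diverge, here is a minimal Python sketch that compounds them year after year. The function name `growth_gap` and the choice of 10% for RAM (the upper end of the 9-10% range) are assumptions made for illustration; both components are taken to start at the same level.

```python
def growth_gap(years, cpu_rate=0.55, ram_rate=0.10):
    """Ratio of processor speed to RAM speed after `years` of compound growth.

    Both start at 1.0. The default rates are the article's figures
    (about 55% per year for processors, roughly 10% per year for RAM).
    """
    cpu = (1 + cpu_rate) ** years
    ram = (1 + ram_rate) ** years
    return cpu / ram


# Print how far the processor has pulled ahead of RAM over time.
for years in (1, 5, 10, 20):
    print(f"after {years:2d} years the processor is {growth_gap(years):,.1f}x ahead")
```

Under these assumptions the gap widens by about 41% each year, which compounds to roughly a factor of 30 after ten years.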

The consequences. The two components therefore improve at profoundly different rates: as time passes, processor speed pulls further and further ahead of RAM speed, and this creates a problem. Because processors must access RAM to carry out their operations, it does not matter how fast they are: if RAM does not keep pace, the entire system slows down.
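The effect can be illustrated with a deliberately simplified model, which is an assumption of this sketch and not a formula from the original analysis: a task's running time is split into a part spent computing and a part spent waiting for RAM, and only the first part shrinks as the processor gets faster. The 50/50 split and the name `run_time` are hypothetical.

```python
def run_time(compute_s, memory_s, cpu_speedup, ram_speedup=1.0):
    """Total running time in seconds under a simplified two-part model.

    compute_s: seconds spent computing on the baseline processor
    memory_s:  seconds spent waiting for RAM on the baseline system
    Each part shrinks only with the speedup of its own component.
    """
    return compute_s / cpu_speedup + memory_s / ram_speedup


# Hypothetical task: 50 s of computation plus 50 s of waiting for RAM.
baseline = run_time(50.0, 50.0, cpu_speedup=1)

# Make the processor faster and faster while RAM stays the same.
for speedup in (1, 10, 100, 1000):
    t = run_time(50.0, 50.0, cpu_speedup=speedup)
    print(f"CPU {speedup:4d}x faster: {t:6.2f} s, overall speedup {baseline / t:.2f}x")
```

In this model the overall speedup can never reach 2x, however fast the processor becomes, because the 50 seconds spent waiting for RAM never shrink.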

The problem is well known in the computing world, and affordable solutions are being sought. They will be of fundamental importance given the surge of big data and its analysis, an increasingly widespread field with an impact on many sectors: from the development of artificial intelligence to the management of medical databases to climate studies, which need enormous amounts of computation to simulate climate trends and help us prepare for the unstable weather that could characterize the coming years.

Where the wall stands. In the computers we normally use, such as the one on which you may be reading this article, the wall is not a serious problem. The performance needed to browse Facebook, watch the latest episode of a favorite TV series, or play video games is not heavily affected by the bottleneck that forms in the communication between RAM and the CPU (the processor). The wall becomes a problem in large computer systems: the supercomputers used in research, big data analysis, and simulations. These machines grind through monstrous amounts of data and, given their high energy consumption, every additional second of operation raises costs.

Breaking down the wall. Some systems and algorithms can reduce the problem, at least in part, but the computational limit remains. The search for new solutions is therefore very important, in both software optimization and hardware. A Greek research team may be on the right track thanks to its development of an optical RAM, which uses light instead of electricity and can reach speeds of 10 gigabits per second.
